The traditional waterfall model of software development is outdated, and agile methodology is rapidly taking its place. Together with DevOps, agile helps development and operations teams work together to create a robust and predictable software delivery process. As part of this transition, and with the right set of tools, practices such as continuous integration (CI) and continuous delivery (CD) lead to an efficient delivery pipeline, all the way from commit to test to deployment.

The move to DevOps, whether in a startup or a large enterprise, reduces the incidence of human error. This model allows R&D to maintain quality while expediting the end-to-end delivery process. In this article, we will cover DevOps methods, including automation, and provide practical tips and tools to help you create your own delivery pipeline.

The 4 Stages of the CI/CD Pipeline

Consider a use case in which an organization is building a product with multiple remote teams, each working on a different microservice. Each team has its own service roadmap and delivery plan. For the integration to lead to a robust delivery, a CI/CD pipeline is a must. With a CI/CD pipeline, whenever the development team changes the code, the pipeline automatically compiles, builds, and deploys it to the various environments.

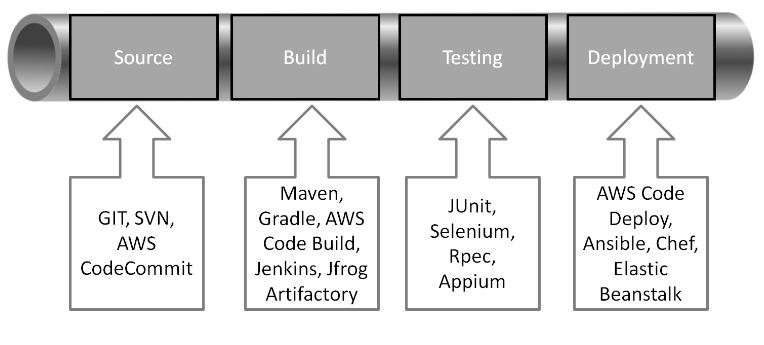

The CI/CD pipeline can be broken down into four stages of an application life cycle. Each stage plays a key role in CI/CD, and the available tools help achieve consistency, speed, and quality of delivery. As shown above, the four stages are Source, Build, Testing, and Deployment.

1. Source

An organization stores application code in a centralized repository system to support versioning, change tracking, collaboration, and auditing, as well as to ensure security and maintain control of the source code. A key element in this stage is automation around version control, which we can consider the baseline unit of the CI phase. Automation involves monitoring the version control system for changes and triggering events such as code compilation and testing.

Let’s say you are working on a Git repository along with other team members. Whenever a change is committed to the repository, a Git webhook notifies the Jenkins job, which compiles the code and runs the unit tests. If compilation or a test case fails, an email is automatically sent to the entire developer group.
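The flow described above (a push arrives, the code is built and tested, and the team is notified only on failure) can be sketched in a few lines of Python. This is a minimal illustration, not how Jenkins or Git webhooks are implemented: `BuildResult`, `handle_push`, and the `build` and `notify` callables are hypothetical names introduced here, standing in for the real CI job and the email step.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class BuildResult:
    """Outcome of compiling a commit and running its unit tests (hypothetical type)."""
    compiled: bool
    failed_tests: List[str]

    @property
    def ok(self) -> bool:
        return self.compiled and not self.failed_tests


def handle_push(commit_id: str,
                build: Callable[[str], BuildResult],
                notify: Callable[[str], None]) -> bool:
    """React to a push notification: build the commit, notify the team on failure."""
    result = build(commit_id)
    if result.ok:
        return True
    if not result.compiled:
        reason = "compilation failed"
    else:
        reason = "failed tests: " + ", ".join(result.failed_tests)
    # In a real setup this would be the email to the developer group.
    notify(f"Build for commit {commit_id} is broken ({reason})")
    return False
```

Passing `build` and `notify` in as callables keeps the decision logic separate from the tooling, so the same function could sit behind a webhook endpoint or a polling job.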

2. Build

This is the key stage of application development, and when completely automated, it allows the dev team to test and build their release multiple times a day. This stage includes compilation, packaging, and running the automated tests. Build automation utilities such as Make, Rake, Ant, Maven, and Gradle are used to generate build artifacts.

The build artifacts can then be stored in an artifact repository and deployed to the environments. Artifact repository solutions such as JFrog Artifactory are used to store and manage the build artifacts. The main advantage of using an artifact repository is that it makes it possible to revert to a previous version of the build if that’s ever necessary. Highly available cloud storage services such as AWS S3 can also be used to store and manage build artifacts. If you run on AWS, consider AWS CodeBuild as the build service. Jenkins, one of the most popular open-source tools, can be used to coordinate the build process.
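To make the revert benefit concrete, here is a small in-memory sketch of what an artifact repository provides: every build is kept under its version, so the previous one can always be fetched again. The `ArtifactStore` class and its methods are hypothetical and only illustrate the idea; they are not the API of JFrog Artifactory or AWS S3.

```python
from typing import Dict, List, Tuple


class ArtifactStore:
    """Toy artifact repository: keeps every published version of each artifact."""

    def __init__(self) -> None:
        # artifact name -> list of (version, payload) in publish order
        self._history: Dict[str, List[Tuple[str, bytes]]] = {}

    def publish(self, name: str, version: str, payload: bytes) -> None:
        history = self._history.setdefault(name, [])
        if any(v == version for v, _ in history):
            # Published versions are immutable, so a rollback target never changes.
            raise ValueError(f"{name} {version} is already published")
        history.append((version, payload))

    def latest(self, name: str) -> str:
        return self._history[name][-1][0]

    def previous(self, name: str) -> str:
        """Version to roll back to: the one published just before the latest."""
        history = self._history[name]
        if len(history) < 2:
            raise LookupError(f"{name} has no earlier version to revert to")
        return history[-2][0]

    def fetch(self, name: str, version: str) -> bytes:
        for v, payload in self._history[name]:
            if v == version:
                return payload
        raise LookupError(f"{name} {version} not found")
```

A rollback then amounts to redeploying `fetch(name, previous(name))` instead of rebuilding old source code.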

3. Testing

Automated tests play an important role in any application development-deployment cycle. The automated tests required can be broken down into three separate categories:

- Unit test: Developers subdivide an application into small units of code and test each one in isolation. This test should be part of the build process and can be automated with tools like JUnit.

- Integration test: In the world of microservices and distributed applications, it is important that separate components still work when the different modules of an application are integrated. This stage may involve testing APIs and integration with a database or other services. This test is generally part of the deployment and release process.

- Functional test: This is end-to-end testing of the application or product, generally performed on staging servers as part of the release process. It can be automated with tools like Selenium to run efficiently across different web browsers.

To streamline testing, tools such as JMeter and Selenium can be integrated with Jenkins to automate functional testing as part of end-to-end testing.
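As an illustration of the first category, the sketch below shows an automated unit test. The article mentions JUnit for Java; this example uses Python's standard `unittest` module as an analogous tool. The function under test, `apply_discount`, and the helper `run_unit_tests` are hypothetical and exist only for the example.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


def run_unit_tests() -> bool:
    """Run the suite the way a build step might, returning True if all tests pass."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

A build job could call `run_unit_tests()` and stop the pipeline when it returns `False`, which is the gate the build stage relies on.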

4. Deployment

Once the build is done and the automated tests for the release candidate are completed, the last stage of the pipeline is the automatic deployment of the new code to the next environment in the pipeline. In this stage, the tested code is deployed to a staging or production environment. If the new release passes all the tests at each stage, it can be moved to the production environment. There are various strategies for deploying to a production environment, such as blue-green deployment, canary deployment, and in-place deployment:

- In a blue-green deployment, two production environments run in parallel. The new “green” version of the application or service is provisioned on separate infrastructure, alongside the last stable “blue” version. Once green is tested and ready, traffic is switched over to it and blue can be de-provisioned.

- In a canary deployment, the new version is deployed to a small subset of nodes first and, after it has been tested on those nodes, it is deployed to all the remaining nodes.

- In-place deployment deploys the code directly to all the live nodes and might incur downtime; however, with rolling updates, you can reduce or remove downtime.
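The canary strategy from the list above can be sketched as a short function: deploy to a few nodes, check them, and continue to the rest only if they look healthy. This is a simplified illustration under stated assumptions: `canary_deploy`, its parameters, and the `deploy` and `healthy` callables are hypothetical stand-ins for whatever deployment tool and health checks are actually in use.

```python
from typing import Callable, List


def canary_deploy(nodes: List[str],
                  new_version: str,
                  deploy: Callable[[str, str], None],
                  healthy: Callable[[str], bool],
                  canary_count: int = 1) -> bool:
    """Deploy to a few canary nodes first; roll out to the rest only if they are healthy."""
    canaries, rest = nodes[:canary_count], nodes[canary_count:]

    for node in canaries:
        deploy(node, new_version)

    if not all(healthy(node) for node in canaries):
        # Stop here: the remaining nodes keep serving the old version.
        # Rolling the canaries back is left to the caller in this sketch.
        return False

    for node in rest:
        deploy(node, new_version)
    return True
```

If the canaries fail their checks, only `canary_count` nodes ever received the new version, which is what limits the impact of a bad release.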

In addition to the deployment itself, there are three key automation elements that need to be considered:

- Cloud infrastructure provisioning: Infrastructure management tools like AWS CloudFormation, AWS OpsWorks Stacks, and Terraform help create templates and clone the entire underlying application stack, including compute, storage, and network, from one environment to another in a few clicks or API calls.

- Configuration management: Tools such as Chef, Puppet, and AWS OpsWorks can ensure configuration files are in the desired state, including OS-level configuration parameters (file permissions, environment variables, etc.). Over the years, these tools have also evolved to automate the whole flow, including code flows and deployment as well as resource orchestration at the infrastructure level.

- Containerization and orchestration: Gone are the days when people had to wait for a server to bootstrap before deploying new versions of code. Container platforms such as Docker are used to package and scale specific services, and a container includes everything required to run the service. This packaging can support the isolation required between staging and production and, together with orchestration tools such as Kubernetes, can help automate deployment while reducing risk when moving code across the different environments.

Now that we have covered all stages of the pipeline, let’s look at a real-life practical use case.

A Pipeline Use Case: 5 Steps

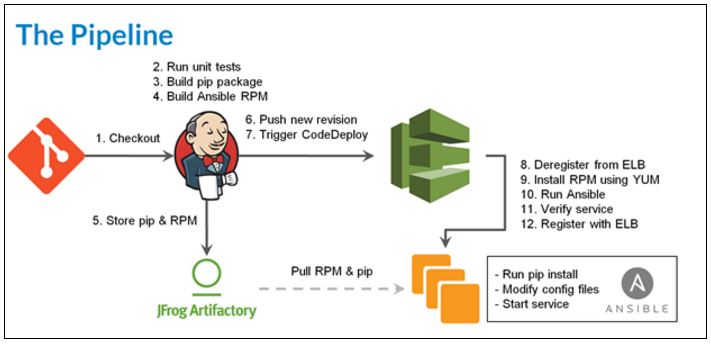

The diagram above represents a build pipeline for deploying a new version of code. In this use case, the processes of CI and CD are implemented using tools such as Jenkins, SCM tools (Git/SVN, for example), JFrog Artifactory, and AWS CodeDeploy.

The pipeline is triggered as soon as someone commits to the repository. The steps in the pipeline are as follows:

- Commit. Once a developer commits the code, the Jenkins server polls the SCM tool (Git/SVN) to get the latest code.

- The new code triggers a new Jenkins job that runs unit tests. The automated unit tests are run using build tools such as Maven or Gradle. The results of these unit tests are monitored. If the unit tests fail, an email is sent to the developer who broke the code and the job exits.

- If the tests pass, the next step is to compile the code. Jenkins is used as the integration tool that packages the application into a pip package (Python) or a WAR file (Java).

- Ansible is then used to create the infrastructure and deploy any required configuration packages. JFrog Artifactory is used to store the build packages, so that the same single binary package is used for deployments to the different environments.

- Using CodeDeploy, the new release is deployed on the servers. The servers might need to be deregistered from the ELB while the code deployment is taking place. The cloud infrastructure or configuration management tools help automate this, including installing packages and starting the different services, such as the web/app server and the DB server.
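The steps above share one property: they run in order, and a failure stops everything after it. A minimal sketch of that control flow follows. The names `Stage` and `run_pipeline` are hypothetical, and each stage is reduced to a callable that returns `True` on success; in the use case described here, the real work would be done by Jenkins, Ansible, Artifactory, and CodeDeploy.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True when the stage succeeds.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure.

    Returns (success, names of the stages that completed).
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            # Fail fast: later stages (packaging, deployment) never run.
            return False, completed
        completed.append(name)
    return True, completed


if __name__ == "__main__":
    # Placeholder stages that always succeed, mirroring the five steps above.
    demo = [
        ("checkout", lambda: True),
        ("unit tests", lambda: True),
        ("package", lambda: True),
        ("provision and store artifact", lambda: True),
        ("deploy", lambda: True),
    ]
    print(run_pipeline(demo))
```

Returning the list of completed stages makes it easy to report where a run stopped, for example in the failure email sent after the unit test step.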

Conclusion: Automate Everything

Marc Andreessen penned his famous “Why Software Is Eating the World” article five years ago. Today, it is considered a cliché that “every company needs to become a software company.”

Innovations running on the cloud don’t require hefty amounts of resources, and every small startup can evolve to be the next disruptive force in its market. This leads to a highly competitive landscape in almost every industry and directly creates the need for speed. Time is of the essence, and rapid software delivery is the key.

Whether pushing a commit to deploy a new version or reverting after a failure, automated processes save time, maintain quality, keep consistency, and allow R&D teams to speed up their delivery and make it predictable.